In this post, we'll talk a bit about the usual proofs about the worst-case query complexity of sorting (at least, in the deterministic case) and then use a beautiful (and surprisingly simple!) tool from computational lower bounds to give a very general argument about the construction

The usual approach

There are many notes and posts talking about the fact that sorting will, in the worst case, always take comparisons; equivalently, in the worst case, the number of comparisons is about a constant factor away from when is large. In many cases, the proof presented depends on the fact that the sorting algorithm is deterministic and goes a little like this (see here for a common case):

Preliminaries

Let be the th step of a deterministic sorting algorithm (this can be represented, for example, as a tuple containing what the next comparison should be) with input where is a list of comparable elements. (For example, can be a list of real numbers, in which case .)

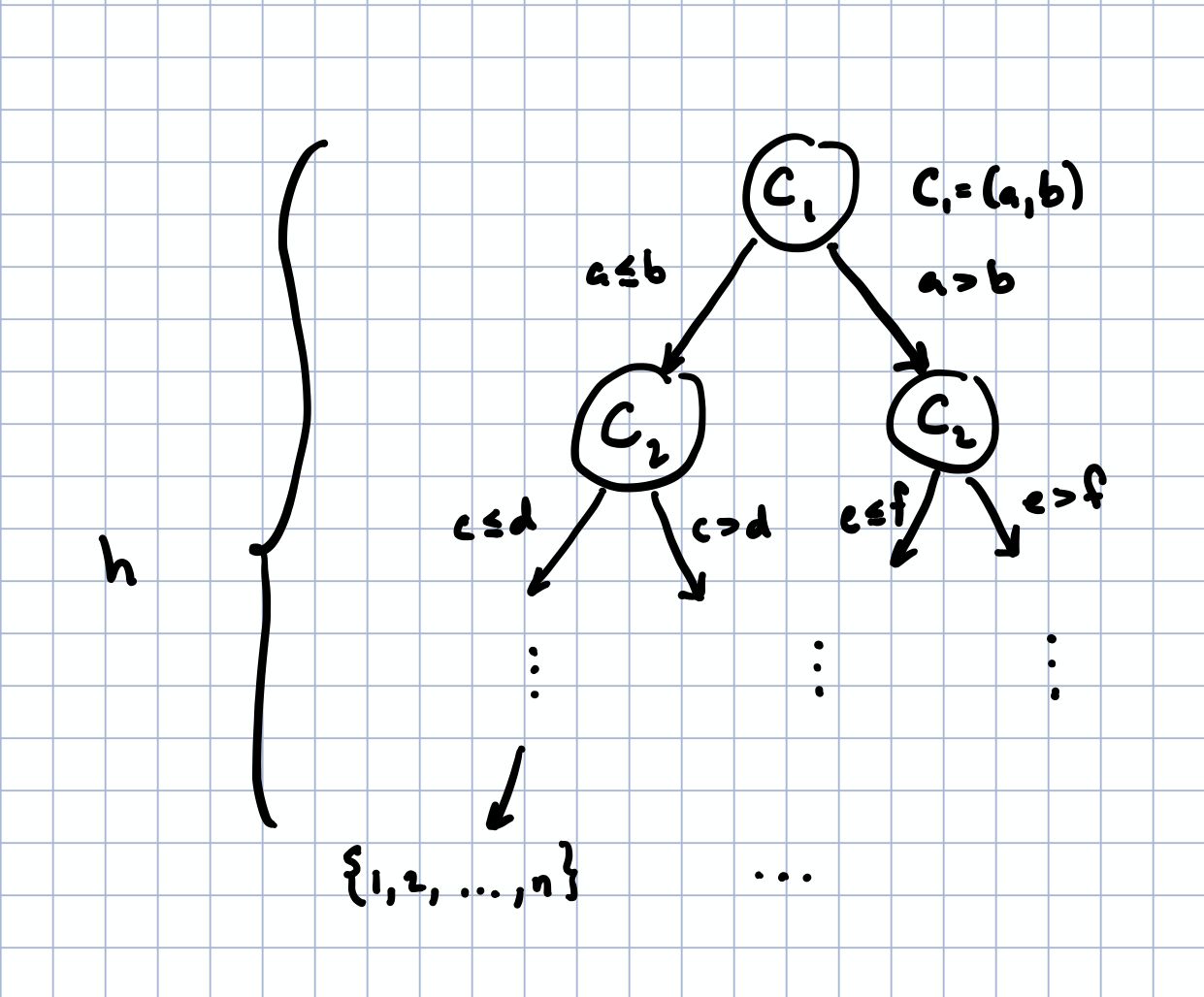

By the definition of a deterministic algorithm, depends only on the past comparisons; i.e., . (I am slightly overloading notation here, of course, but the meaning should be clear.) This means that we can view the behavior of the algorithm as a tree, where is a child node of which itself is a child node of , etc. Additionally, the tree is binary since the output of a comparison is only one of two possibilities (if , then either or ).

Finally, let the leaf nodes of this tree be the list of indices (say, , where each entry is an index, for ) such that the list permuted at these indices is sorted, . Note that the number of nodes needed to get from the root node to a given leaf node (or permutation) is exactly the number of comparisons that the algorithm makes before it returns a specific permutation. If we can show that the height (the length of the longest path from root to leaf) of the tree is always larger than about , then we've shown that this algorithm must take at least steps.

Bound on the height

The idea for the bound is pretty simple: since this algorithm is a sorting algorithm and it can receive any unsorted list, then each of the possible permutations must be a leaf node of the tree. (Why?) Additionally, the maximum number of leaves for a binary tree of height is , which, in turn, implies that we must have . Taking the log of both sides shows that:

which is exactly what we wanted to show. (The second equality is shown, for example, in the original reference for this proof; see page 2.)

Some takeaways from this proof

To prove this statement we only really used a few things: (a) the algorithm has to decide between things, and (b) at each query, it only receives a "yes" or a "no" (as we will soon make rigorous, it only gains 1 bit of information from each query). The rest of the proof simply sets up scaffolding for the remaining parts, most of which is really somewhat orthogonal to our intuition. The point is: look, we have things we have to decide on and every time we ask a question, we cut down our list of possible true answers by about half. How many times do we need to cut down our list to be able to have exactly one possible answer? (Of course, as we showed before, this should be .)

Now, a good number of writings (and some textbooks) I've seen simply end here by saying "the algorithm gains at most 1 bit for every query and we need at least bits" and then give some vague citation to Shannon's theorem about communication without explicitly writing out any argument. (While there is some connection, it's unclear what it is or how to even make it rigorous in a general sense.) It's a bit of a shame since it doesn't really take much math to fully justify this intuitive statement, as we will see next.

An information-theoretic approach

The idea behind a (simple) information theoretic approach is to view the algorithm as attempting to 'uncover' the true, sorted permutation by querying an oracle that knows whether two elements are in sorted order or not. Here, the oracle gets to choose some 'true' permutation uniformly at random and then the sorting algorithm queries the oracle in a restricted sense: it can only ask yes or no questions about the list.

More formally, we will let be the set of all possible permutations of through and let the oracle's permutation such that is uniformly randomly sampled from . Then, the algorithm gets to ask a sequence of yes/no queries for which are dependent on the permutation , and, at the end of queries, must respond with some 'guess' for the true permutation , which is a random variable that depends only on .

We can represent the current set up as a Markov chain , since is conditionally independent of given (i.e., the algorithm can only use information given by ) while the random variable depends only on . The idea then is to give a bound on the number of queries required for an algorithm to succeed with probability 1.

To give a lower bound on this quantity, we'll use a tool from information theory called Fano's inequality, which, surprisingly, I don't often see taught in information theory courses. (Perhaps I haven't been taking the right ones!)

Fano's inequality

I learned about this lovely inequality in John Duchi's class, EE377: Information Theory and Statistics (the lectures notes for the class are here). Its proof really makes it clear why entropy, as defined by Shannon, is pretty much the exact right quantity to look at. We'll explore a weaker version of it here that is simpler to prove and requires fewer definitions but which will suffice for our purposes.

We will use as random variables and set as the entropy, defined:

where is the space of values that can take on. The conditional entropy of given is defined as

As usual, the entropy is a measure of the 'uncertainty' in the variable , with the maximally uncertain distribution being the uniform one.[1] Additionally, note that is the entropy taken with respect to the joint distribution of and . Finally, if is a deterministic function of , then which follows from the definition.

For this post, we will only make use of the following three properties of the entropy (I will not prove them here, as there are many available proofs of them, including the notes above and Cover's famous Elements of Information Theory):

The entropy can only decrease when removing variables, .

The entropy is smaller than the log of the size of the sample space . (Equivalently, conditioning reduces entropy, and the uniform distribution on has the highest possible entropy, .)

If a random variable is conditionally independent of given (i.e., if is a Markov chain), then . This is often called a data processing inequality, which simply says that has smaller entropy knowing than knowing a variable, , that has undergone further processing. In other words, you cannot gain more information about from a variable by further processing .

This is all we need to prove the following inequality. Let be a Markov chain such that is conditionally independent of given and is uniformly drawn from , then the probability that is given by and satisfies

where is the number of binary queries made and is the number of elements in .

A quick aside

Proving this inequality is enough to show the claim. Note that, we want the probability of error to be 0 (since we want our algorithm to work!) so

and, since is the space of possible permutations of size , (of which there are of) then and then must satisfy

In other words, the number of queries (or comparisons, or whatever) must be approximately at least as large as , asymptotically (up to constant multiples). In fact, this proves a slightly stronger statement that no probabilistic algorithm can succeed with nonvanishing probability if the number of queries is not on the order of , which our original proof above does not cover!

Now, the last missing piece is showing that the probability of error is bounded from below.

A proof of Fano's inequality

This is a slightly simplified version of the proof presented in Duchi's notes (see section 2.3.2) for the specific case we care about, which requires less additional definitions and supporting statements. Let be the 'error' random variable that is if and 0 otherwise, then let's look at the quantity :

by our definition of conditional entropy, and

again by the same definition. Since whenever there is no error, , then since is known, so, we really have

Since can only take on two values, we have that and we also have that , which gives

Now, we have that

The first inequality follows from the fact that we're removing a variable and the second follows from statement 3 in the previous section (as ). Using the definition of , then we have

The first inequality here follows since we're (again!) removing a variable and the equality follows from the fact that is uniformly randomly drawn from and the last inequality follows from the fact that the entropy of is always smaller than that of the uniform distribution on . Finally, note that, if we have queries, then (this is the number of possible values a sequence of binary queries can take on). So, (in other words, the maximum amount of information we can get with binary queries is bits) so we find

or, after some slight rewriting:

as required!

Some discussion

I think overall that Fano's inequality is a relatively straightforward way of justifying a ton of the statements one can make about information theory without needing to invoke a large number of more complicated notions. Additionally, the proof is relatively straightforward (in the sense that it only requires very few definitions and properties of the entropy) while also matching our intuition about these problems pretty much exactly.

In particular, we see that sorting is hard not because somehow ordering elements is difficult, but because we have to decide between a bunch of different items (in fact, of them) while only receiving a few bits of information at any point in time! In fact, this bound applies to any yes/no query that one can make of the sorted data, not just comparisons, which is interesting.

There are some even more powerful generalizations of Fano's inequality which can also be extended to machine learning applications: you can use them to show that, given only some small amount of data, you cannot decide between a parameter that correctly describe the data and one that does not.

This is all to say that, even though entropy is a magical quantity, that doesn't mean we can't say very rigorous things about it (and make our intuitions about lower bounds even more rigorous, to boot!).

| [1] | In fact, the entropy is really a measure of how close a variable is to the uniform distribution, in the case of compact domains —the higher the entropy, the closer it is. |